Multimodal SRL

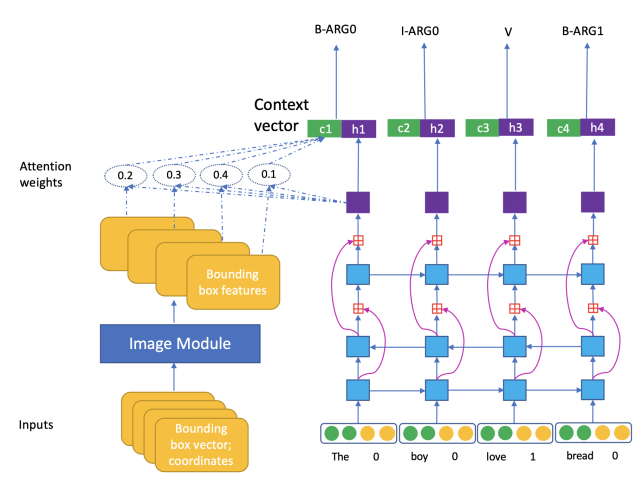

My master's thesis explored whether incorporating visual context can improve semantic role labeling (SRL). In one approach, I used attention to incorporate bounding-box features into the hidden states of a standard Bi-LSTM. In another, I added a contrastive loss between the sentence embedding and the whole-image feature to guide training. Because labeled data was lacking, I automatically obtained semantic role labels for two image captioning datasets (MSCOCO, Flickr30k). Despite relying on these automatically obtained labels, the attention approach performs slightly better than a text-only baseline, and further analysis suggests that the improvement comes from better grounding of semantic roles in the image features. [Master_thesis][slides]
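To make the two ideas concrete, here is a minimal sketch in plain Python (no deep-learning framework, so it runs anywhere). It assumes dot-product attention in which each Bi-LSTM hidden state queries the bounding-box features and the attended visual vector is concatenated to the hidden state, and an InfoNCE-style contrastive loss over a batch of (sentence, image) pairs that uses the other images in the batch as negatives. The function names, the concatenation-based fusion, the dot-product scoring and the temperature value are illustrative assumptions, not necessarily the choices made in the thesis.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_to_boxes(hidden_states: List[Vector], box_features: List[Vector]) -> List[Vector]:
    """Hypothetical fusion step: each token's Bi-LSTM hidden state attends over
    the bounding-box features (dot-product scores), and the weighted visual
    context is concatenated to the hidden state. Assumes both share one dimension."""
    dim = len(box_features[0])
    fused = []
    for h in hidden_states:
        weights = softmax([dot(h, b) for b in box_features])
        context = [sum(w * b[d] for w, b in zip(weights, box_features)) for d in range(dim)]
        fused.append(h + context)  # list concatenation gives [h; context]
    return fused


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def contrastive_loss(sentence_embs: List[Vector], image_embs: List[Vector],
                     temperature: float = 0.1) -> float:
    """Hypothetical InfoNCE-style loss (sentence-to-image direction only): the
    i-th sentence should be more similar to the i-th image than to the other
    images in the batch, which act as negatives."""
    sents = [normalize(v) for v in sentence_embs]
    imgs = [normalize(v) for v in image_embs]
    total = 0.0
    for i, s in enumerate(sents):
        probs = softmax([dot(s, img) / temperature for img in imgs])
        total -= math.log(probs[i])
    return total / len(sents)


if __name__ == "__main__":
    hidden = [[1.0, 0.0], [0.0, 1.0]]             # two tokens, dimension 2
    boxes = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # three detected regions
    fused = attend_to_boxes(hidden, boxes)
    print(len(fused[0]))  # 4: hidden state (2) + visual context (2)

    sents = [[1.0, 0.0], [0.0, 1.0]]
    matched = contrastive_loss(sents, [[1.0, 0.0], [0.0, 1.0]])
    swapped = contrastive_loss(sents, [[0.0, 1.0], [1.0, 0.0]])
    print(matched < swapped)  # True: correctly paired captions and images give a lower loss
```

Concatenation keeps the original textual hidden state intact, so a downstream role classifier can still fall back on text alone when the image carries no useful signal; a gated or additive fusion would be an equally plausible alternative.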

Conjecture:

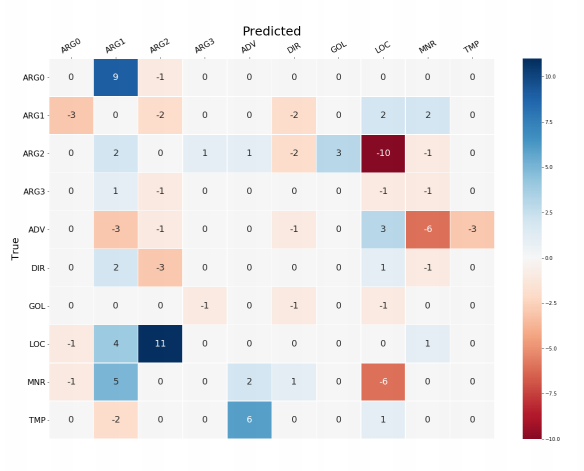

The attention model tends to label location-related entities correctly. Images carry rich location information, so the attention model may be exploiting that information effectively. However, since ARG2 is known to be easily confused with LOC and DIR (He et al.), the extra location information might also make the model more likely to mislabel ARG2 as LOC.
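The conjecture could be checked by comparing, for the baseline and the attention model, how often gold ARG2 arguments are predicted as LOC or DIR. Below is a minimal sketch, assuming gold and predicted labels have already been aligned per argument span as parallel lists of role strings; the function names and the toy labels are hypothetical and are not results from the thesis.

```python
from collections import Counter
from typing import List, Sequence


def confusion_counts(gold: List[str], pred: List[str]) -> Counter:
    """Count (gold, predicted) label pairs for wrongly labelled spans.
    Assumes gold[i] and pred[i] describe the same argument span."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must be aligned span by span")
    return Counter((g, p) for g, p in zip(gold, pred) if g != p)


def arg2_as_location_rate(gold: List[str], pred: List[str],
                          location_labels: Sequence[str] = ("LOC", "DIR")) -> float:
    """Fraction of gold ARG2 spans that the model labelled with a location-like role."""
    arg2_preds = [p for g, p in zip(gold, pred) if g == "ARG2"]
    if not arg2_preds:
        return 0.0
    return sum(p in location_labels for p in arg2_preds) / len(arg2_preds)


if __name__ == "__main__":
    # Made-up labels purely to show the computation; not thesis results.
    gold = ["ARG2", "ARG2", "LOC", "ARG2"]
    pred = ["LOC", "ARG2", "LOC", "DIR"]
    print(confusion_counts(gold, pred))       # two errors: ARG2->LOC and ARG2->DIR
    print(arg2_as_location_rate(gold, pred))  # 2 of 3 gold ARG2 spans, about 0.667
```

Running both functions on each system's predictions over the same test set would show whether the attention model's ARG2-as-location rate is actually higher than the baseline's.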